Hello @team, looking at the evaluation metric given on the challenge overview page, it appears that there is no penalty for incorrect predictions - is this correct? I hope not, because in that case, confidence scores of 1 for every prediction will maximise the score for correct predictions without incurring any penalty for wrong predictions.

There’s no penalty for incorrect predictions. The confidence score is never used directly as a weight. Weights are assigned to predictions based on confidence scores, but they are normalized to guarantee that the sum of weights for each sample equals 1.

Onward Team

OK, thanks for the clarification. So, if I understand right, confidence scores of 1, 0.8 and 0.2 would translate into weights of 0.5, 0.4 and 0.1, is that correct?
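To make the arithmetic in that example concrete, here is a minimal sketch of plain proportional normalization, where each confidence is divided by the sum for that sample. This is only my assumption about how the mapping might work, not the actual scoring code, and `normalize_confidences` is a name made up for illustration.

```python
def normalize_confidences(confidences):
    """Divide each confidence by the per-sample total so the weights sum to 1.

    Assumption: simple proportional normalization. The real mapping used by
    the scorer may differ.
    """
    total = sum(confidences)
    if total <= 0:
        raise ValueError("confidence scores must sum to a positive value")
    return [c / total for c in confidences]


# The three confidences from the example above: 1 + 0.8 + 0.2 = 2
weights = normalize_confidences([1.0, 0.8, 0.2])
print(weights)  # approximately 0.5, 0.4 and 0.1
```

Under this assumption, submitting 1 for every prediction gives the same weights as submitting 0.5 for every prediction, since only the ratios between the scores matter.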

The weight mapping is confidential at this time, but you are correct that the weights are normalized so that they sum to 1. Here are a couple of resources that will help you get started in designing your confidence metric:

Onward Team

Hi,

I am a bit confused with the metric as well.

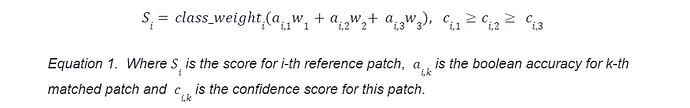

- In this formula what are w coefficients?

- c seems to be the confidence scores that we submit. Are they only used to sort 3 predictions for each query image? Are they somehow related to w or are they only used for ordering the predictions?

While we are here, I have another doubt as well. The formula says “a_{i,k} is the boolean accuracy for k-th patch”. I interpret ‘boolean accuracy’ here to mean that each image in the query set will be considered to have exactly one correct match in the image corpus (e.g. Image 1 in the query set will only correspond to Image D in the image corpus, and any other suggestion will get a score of 0). Is this interpretation correct?

The w coefficients are normalized weights that are fixed global parameters of the scoring algorithm.

You’re correct - c are the confidence scores from your submissions. They are used to assign weights w to each prediction. They are also used to calculate calibration metrics to decide who will be awarded the prize for the best calibrated confidence algorithm.

@ogneebho.mukhopadhya

The boolean accuracy here is defined with regard to a binary classification problem: Does predicted image 1 from the image corpus belong to the same class as reference image A from the query set?

Each image in the query set has a minimum of three matches in the image corpus, so you can always get a score of exactly class_weight for each reference patch.

Onward Team

Hi Onward Team,

Currently, your scorer on the backend allows submitting a single image as all 3 retrieval results. Obviously, such a solution gets a better score than a correct one where all retrieved images are different. Please correct this; otherwise, people will abuse it.
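For reference, the kind of check I have in mind could look roughly like the sketch below. The submission layout (a dict mapping each query ID to its list of predicted image IDs) and the function name `find_duplicate_predictions` are assumptions for illustration only, not the actual submission format or backend code.

```python
def find_duplicate_predictions(submission):
    """Return the query IDs whose predicted image IDs are not all distinct.

    Assumption: `submission` maps a query ID to a list of predicted image IDs.
    """
    return [
        query_id
        for query_id, predicted_ids in submission.items()
        if len(set(predicted_ids)) != len(predicted_ids)
    ]


# Hypothetical example: q2 repeats the same image for all 3 results
example = {
    "q1": ["img_a", "img_b", "img_c"],
    "q2": ["img_d", "img_d", "img_d"],
}
print(find_duplicate_predictions(example))  # ['q2']
```

A scorer could reject such rows or count each distinct image only once.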

Best,

Dmitry

Thank you for raising this issue. We have now updated the scoring.

Onward Team